Egocentric Visual Self-Modeling for Autonomous Robot Dynamics Prediction and Adaptation

We developed an approach that lets robots learn their own dynamics using only a first-person camera view, without any prior knowledge of their morphology, kinematics, or task! 🎥💡

🦿 Tested on a 12-DoF robot, the self-supervised model supported basic locomotion tasks using visual input.

🔧 The robot can detect damage and adapt its behavior autonomously. Resilience! 💪

🌍 The model proved its versatility by working across different robot configurations!

🔮 This egocentric visual self-model could be the key to unlocking a new era of autonomous, adaptable, and resilient robots.

Abstract: The ability of robots to model their own dynamics is key to autonomous planning and learning, as well as to autonomous damage detection and recovery. Traditionally, dynamic models are pre-programmed or learned from external observations. Here, we demonstrate for the first time how a task-agnostic dynamic self-model can be learned using only a single first-person-view camera in a self-supervised manner, without any prior knowledge of robot morphology, kinematics, or task. Through experiments on a 12-DoF robot, we demonstrate the capabilities of the model in basic locomotion tasks using visual input. Notably, the robot can autonomously detect anomalies, such as damaged components, and adapt its behavior, showcasing resilience in dynamic environments. Furthermore, the model’s generalizability was validated across robots with different configurations, emphasizing its potential as a universal tool for diverse robotic systems. The egocentric visual self-model proposed in our work paves the way for more autonomous, adaptable, and resilient robotic systems.
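The abstract's core loop is: learn a self-model from the robot's own experience, notice damage when the model's predictions stop matching what is observed, then adapt. The toy Python sketch below illustrates only that loop; it is not the authors' method, which learns from raw first-person camera images. Here the camera is replaced by a hypothetical scalar "observed displacement" signal, the learned model is a linear predictor over 12 joint commands (echoing the 12-DoF robot), and the true gains, noise level, learning rate, and 10× error threshold are all assumptions chosen for illustration.

```python
import random

N_JOINTS = 12          # echoes the 12-DoF robot; treated as 12 scalar joint commands (assumption)
NOISE_STD = 0.01       # hypothetical sensing noise on the observed displacement
THRESHOLD_FACTOR = 10  # hypothetical: flag damage when error exceeds 10x the healthy baseline


def observe_displacement(action, gains):
    """Hypothetical stand-in for the camera: the body displacement a robot might
    estimate from its own first-person view, modeled as a noisy weighted sum of
    joint commands."""
    return sum(g * a for g, a in zip(gains, action)) + random.gauss(0.0, NOISE_STD)


class LinearSelfModel:
    """Toy forward self-model that predicts displacement from an action vector."""

    def __init__(self, n_actions, lr=0.05):
        self.w = [0.0] * n_actions
        self.lr = lr

    def predict(self, action):
        return sum(w * a for w, a in zip(self.w, action))

    def update(self, action, observed):
        # One stochastic-gradient step on the squared prediction error.
        err = observed - self.predict(action)
        self.w = [w + self.lr * err * a for w, a in zip(self.w, action)]


def collect(n, gains):
    """Self-supervised data: random joint commands paired with what was observed."""
    data = []
    for _ in range(n):
        action = [random.uniform(-1.0, 1.0) for _ in range(N_JOINTS)]
        data.append((action, observe_displacement(action, gains)))
    return data


def train(model, data, epochs=20):
    for _ in range(epochs):
        for action, observed in data:
            model.update(action, observed)


def mean_sq_error(model, data):
    return sum((y - model.predict(a)) ** 2 for a, y in data) / len(data)


random.seed(0)
healthy_gains = [0.5] * N_JOINTS  # hypothetical true dynamics of the intact robot

# 1. Learn the self-model from the robot's own experience.
model = LinearSelfModel(N_JOINTS)
train(model, collect(500, healthy_gains))
baseline = mean_sq_error(model, collect(100, healthy_gains))
threshold = THRESHOLD_FACTOR * baseline

# 2. Simulate damage: joint 3 no longer contributes to motion.
damaged_gains = list(healthy_gains)
damaged_gains[3] = 0.0
recent = collect(100, damaged_gains)
damage_detected = mean_sq_error(model, recent) > threshold

# 3. Adapt: retrain the self-model on post-damage experience.
if damage_detected:
    train(model, recent)
error_after_adapting = mean_sq_error(model, collect(100, damaged_gains))

print(f"baseline={baseline:.2e} detected={damage_detected} after={error_after_adapting:.2e}")
```

In this toy setting, zeroing one joint's contribution pushes the prediction error far above the threshold, and retraining on the post-damage data brings it back near the healthy baseline. A real system would replace the linear predictor with a learned image-based model and calibrate the detection threshold empirically.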

Authors: Yuhang Hu, Boyuan Chen, Hod Lipson

Affiliation: Columbia University, Duke University

Paper Link: https://arxiv.org/pdf/2207.03386.pdf

Website: yuhang-hu.com

Video: Egocentric Visual Self-Modeling for Autonomous Robot Dynamics Prediction and Adaptation (Yuhang Hu channel)

Показать

Комментарии отсутствуют

Информация о видео

Другие видео канала

Mechanical Packaging Machine

Mechanical Packaging Machine Training Robot

Training Robot Columbia University Robotics Studio (Bipedal Robot)

Columbia University Robotics Studio (Bipedal Robot) Bipedal Robot Locomotion

Bipedal Robot Locomotion Knolling Bot: Learning Robotic Object Arrangement from Tidy Demonstrations

Knolling Bot: Learning Robotic Object Arrangement from Tidy Demonstrations Legged Robot Test 2020/10/14

Legged Robot Test 2020/10/14 A Legged Robot Learned to walk in Pybullet (Deep Reinforcement Learning)

A Legged Robot Learned to walk in Pybullet (Deep Reinforcement Learning) Training a Legged Robot to walk by Deep Reinforcement Learning

Training a Legged Robot to walk by Deep Reinforcement Learning Reconfigurable Robot Identification from Motion Data

Reconfigurable Robot Identification from Motion Data Legged Robot Wave

Legged Robot Wave Knolling bot 2.0: Enhancing Object Organization with Self-supervised Graspability Estimation.

Knolling bot 2.0: Enhancing Object Organization with Self-supervised Graspability Estimation. Walking Challenge for Bipedal Robot (Deep RL Algorithm)

Walking Challenge for Bipedal Robot (Deep RL Algorithm) Battery holder

Battery holder Hardware Design

Hardware Design Leg Modularity demonstrated

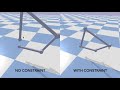

Leg Modularity demonstrated Create Constraint in Pybullet (Four-bar Kinematic Loop)

Create Constraint in Pybullet (Four-bar Kinematic Loop) Generative Design (Desk & Chair)

Generative Design (Desk & Chair) Robot Studio (Forbiddence)

Robot Studio (Forbiddence) Evolved Robot

Evolved Robot Run Away From Here

Run Away From Here