VARI-SOUND: A Varifocal Lens for Sound

Gianluca Memoli, Letizia Chisari, Jonathan P. Eccles, Mihai Caleap, Bruce W. Drinkwater, Sriram Subramanian

CHI '19: ACM CHI Conference on Human Factors in Computing Systems

Session: Sound-based Interaction

Abstract

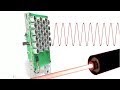

Centuries of development in optics have given us passive devices (e.g. lenses, mirrors and filters) that enrich audience immersion with light effects, but there is nothing similar for sound. Beam-forming in concert halls and at outdoor gigs still requires a large number of speakers, while headphones remain the state of the art for personalized immersive audio in VR. In this work, we show how 3D-printed acoustic meta-surfaces, assembled into the equivalent of optical systems, may offer a different solution. We demonstrate how to build them and how to apply simple design tools, such as the thin-lens equation, to sound as well. We present some key acoustic devices: a "collimator", which transforms a standard computer speaker into an acoustic "spotlight", and a "magnifying glass", which creates sound sources that appear to come from locations different from the speaker itself. Finally, we demonstrate an acoustic varifocal lens and discuss both applications analogous to auto-focus cameras and VR headsets and the limitations of the technology.
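To make the thin-lens idea above concrete, here is a minimal sketch, not the authors' design code. It treats an acoustic meta-surface as an ideal thin lens obeying 1/s_o + 1/s_i = 1/f (source distance s_o, image distance s_i, focal length f), ignoring diffraction, aberrations and frequency dependence. The function names (`image_distance`, `lateral_magnification`, `focal_length_for`), the sign convention and the 10 cm focal length are illustrative assumptions.

```python
import math


def image_distance(f: float, s_o: float) -> float:
    """Solve the ideal thin-lens equation 1/s_o + 1/s_i = 1/f for s_i.

    Assumed sign convention: distances in metres, f > 0 for a converging
    lens, s_o > 0 for a real source in front of the lens. A negative result
    is a virtual image on the source side; math.inf means the source sits
    at the focal point and the output beam is collimated.
    """
    if math.isclose(s_o, f):
        return math.inf
    return 1.0 / (1.0 / f - 1.0 / s_o)


def lateral_magnification(f: float, s_o: float) -> float:
    """Lateral magnification m = -s_i / s_o (undefined for a collimated beam)."""
    s_i = image_distance(f, s_o)
    if math.isinf(s_i):
        raise ValueError("source at focal point: beam is collimated")
    return -s_i / s_o


def focal_length_for(s_o: float, s_i: float) -> float:
    """Focal length an ideal varifocal lens would need to image s_o onto s_i."""
    return 1.0 / (1.0 / s_o + 1.0 / s_i)


if __name__ == "__main__":
    f = 0.10  # hypothetical 10 cm focal length
    # "Collimator": speaker at the focal point -> image at infinity.
    print("collimator:", image_distance(f, 0.10))
    # "Magnifying glass": speaker inside f -> virtual source behind the lens.
    print("magnifier:", image_distance(f, 0.05), lateral_magnification(f, 0.05))
    # Focusing: speaker at 30 cm -> real focus on the far side of the lens.
    print("focus:", image_distance(f, 0.30))
    # Varifocal: focal length needed to move that focus out to 50 cm.
    print("varifocal f:", focal_length_for(0.30, 0.50))
```

Under these assumptions, a speaker 5 cm from a 10 cm lens yields a virtual source 10 cm behind the lens (on the speaker's side), i.e. the sound appears to come from twice as far away. In this idealized model, a varifocal lens corresponds to changing f at run time so that the focus tracks a listener.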

DOI: https://doi.org/10.1145/3290605.3300713

Web: https://chi2019.acm.org/

Recorded at the ACM CHI Conference on Human Factors in Computing Systems, Glasgow, Scotland, May 4-9, 2019

Video "VARI-SOUND: A Varifocal Lens for Sound" from the ACM SIGCHI channel