Backpropagation (Part 4): The Sigmoid Transfer Function and Its Derivative

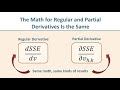

A differentiable transfer function, such as the sigmoid (logistic) function, is essential to the backpropagation method for training neural networks such as the Multilayer Perceptron (MLP). Having re-acquainted ourselves with the chain rule from differential calculus (in Parts 3a and 3b), we now apply it to take the derivative of the transfer function with respect to the node input that feeds into that function.
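As a rough illustration of the derivative described above, here is a minimal Python sketch. It assumes the plain logistic form sigma(x) = 1 / (1 + exp(-x)) with no gain or scaling parameter; the video may use a different parameterization. Under that assumption the chain rule gives sigma'(x) = sigma(x) * (1 - sigma(x)). The function names `sigmoid`, `sigmoid_derivative`, and `central_difference` are hypothetical and chosen only for this example.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic transfer function, assumed here to be 1 / (1 + exp(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_derivative(x: float) -> float:
    """Derivative of the sigmoid with respect to its input x.

    Writing s = (1 + exp(-x)) ** -1 and applying the chain rule gives
    ds/dx = exp(-x) / (1 + exp(-x)) ** 2, which simplifies to s * (1 - s).
    """
    s = sigmoid(x)
    return s * (1.0 - s)


def central_difference(f, x: float, h: float = 1e-6) -> float:
    """Numerical estimate of f'(x), used only to sanity-check the formula."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


# Compare the analytic derivative with the numerical estimate at a few inputs.
for x in (-2.0, 0.0, 2.0):
    analytic = sigmoid_derivative(x)
    numeric = central_difference(sigmoid, x)
    print(f"x={x:+.1f}  analytic={analytic:.6f}  numeric={numeric:.6f}")
```

The derivative is written in terms of the function's own output, which is one reason the sigmoid is convenient in backpropagation: the value computed on the forward pass can be reused on the backward pass. This sketch is not hardened for extreme inputs; `math.exp(-x)` overflows for very large negative `x`.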

Video: Backpropagation (Part 4): The Sigmoid Transfer Function and Its Derivative, from the channel of Alianna J. Maren
