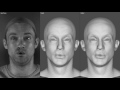

Speech and Facial Animation at SIGGRAPH 2017 -- comparison of technical papers

Comparison of three technical papers presented at SIGGRAPH 2017 in the "Speech and Facial Animation" session.

http://s2017.siggraph.org/technical-papers/sessions/speech-and-facial-animation

Tero Karras, Timo Aila, Samuli Laine, Antti Herva, and Jaakko Lehtinen.

Audio-Driven Facial Animation by Joint End-to-End Learning of Pose and Emotion.

ACM Trans. Graph. 36, 4, Article 94 (July 2017).

http://research.nvidia.com/publication/2017-07_Audio-Driven-Facial-Animation

Supasorn Suwajanakorn, Steven M. Seitz, and Ira Kemelmacher-Shlizerman.

Synthesizing Obama: Learning Lip Sync from Audio.

ACM Trans. Graph. 36, 4, Article 95 (July 2017).

https://grail.cs.washington.edu/projects/AudioToObama/

Sarah Taylor, Taehwan Kim, Yisong Yue, Moshe Mahler, James Krahe, Anastasio Garcia Rodriguez, Jessica Hodgins, and Iain Matthews.

A Deep Learning Approach for Generalized Speech Animation.

ACM Trans. Graph. 36, 4, Article 93 (July 2017).

https://www.disneyresearch.com/publication/deep-learning-speech-animation/

Видео Speech and Facial Animation at SIGGRAPH 2017 -- comparison of technical papers канала Tero Karras FI

http://s2017.siggraph.org/technical-papers/sessions/speech-and-facial-animation

Tero Karras, Timo Aila, Samuli Laine, Antti Herva, and Jaakko Lehtinen.

Audio-Driven Facial Animation by Joint End-to-End Learning of Pose and Emotion.

ACM Trans. Graph. 36, 4, Article 94 (July 2017).

http://research.nvidia.com/publication/2017-07_Audio-Driven-Facial-Animation

Supasorn Suwajanakorn, Steven M. Seitz, and Ira Kemelmacher-Shlizerman.

Synthesizing Obama: Learning Lip Sync from Audio.

ACM Trans. Graph. 36, 4, Article 95 (July 2017).

https://grail.cs.washington.edu/projects/AudioToObama/

Sarah Taylor, Taehwan Kim, Yisong Yue, Moshe Mahler, James Krahe, Anastasio Garcia Rodriguez, Jessica Hodgins, and Iain Matthews.

A Deep Learning Approach for Generalized Speech Animation.

ACM Trans. Graph. 36, 4, Article 93 (July 2017).

https://www.disneyresearch.com/publication/deep-learning-speech-animation/

Видео Speech and Facial Animation at SIGGRAPH 2017 -- comparison of technical papers канала Tero Karras FI

Показать

Комментарии отсутствуют

Информация о видео

Другие видео канала

Production-Level Facial Performance Capture Using Deep Convolutional Neural Networks

Production-Level Facial Performance Capture Using Deep Convolutional Neural Networks Progressive Growing of GANs for Improved Quality, Stability, and Variation

Progressive Growing of GANs for Improved Quality, Stability, and Variation One hour of imaginary celebrities

One hour of imaginary celebrities A Style-Based Generator Architecture for Generative Adversarial Networks

A Style-Based Generator Architecture for Generative Adversarial Networks Audio-Driven Facial Animation by Joint End-to-End Learning of Pose and Emotion

Audio-Driven Facial Animation by Joint End-to-End Learning of Pose and Emotion Progressive Growing of GANs for Improved Quality, Stability, and Variation

Progressive Growing of GANs for Improved Quality, Stability, and Variation