Audio-Driven Facial Animation by Joint End-to-End Learning of Pose and Emotion

ACM Transactions on Graphics (Proc. SIGGRAPH 2017)

http://research.nvidia.com/publication/2017-07_Audio-Driven-Facial-Animation

Tero Karras (NVIDIA)

Timo Aila (NVIDIA)

Samuli Laine (NVIDIA)

Antti Herva (Remedy Entertainment)

Jaakko Lehtinen (NVIDIA and Aalto University)

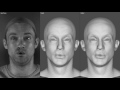

We present a machine learning technique for driving 3D facial animation by audio input in real time and with low latency. Our deep neural network learns a mapping from input waveforms to the 3D vertex coordinates of a face model, and simultaneously discovers a compact, latent code that disambiguates the variations in facial expression that cannot be explained by the audio alone. During inference, the latent code can be used as an intuitive control for the emotional state of the face puppet.

We train our network with 3-5 minutes of high-quality animation data obtained using traditional, vision-based performance capture methods. Even though our primary goal is to model the speaking style of a single actor, our model yields reasonable results even when driven with audio from other speakers with different gender, accent, or language, as we demonstrate with a user study. The results are applicable to in-game dialogue, low-cost localization, virtual reality avatars, and telepresence.

Видео Audio-Driven Facial Animation by Joint End-to-End Learning of Pose and Emotion канала Tero Karras FI

http://research.nvidia.com/publication/2017-07_Audio-Driven-Facial-Animation

Tero Karras (NVIDIA)

Timo Aila (NVIDIA)

Samuli Laine (NVIDIA)

Antti Herva (Remedy Entertainment)

Jaakko Lehtinen (NVIDIA and Aalto University)

We present a machine learning technique for driving 3D facial animation by audio input in real time and with low latency. Our deep neural network learns a mapping from input waveforms to the 3D vertex coordinates of a face model, and simultaneously discovers a compact, latent code that disambiguates the variations in facial expression that cannot be explained by the audio alone. During inference, the latent code can be used as an intuitive control for the emotional state of the face puppet.

We train our network with 3-5 minutes of high-quality animation data obtained using traditional, vision-based performance capture methods. Even though our primary goal is to model the speaking style of a single actor, our model yields reasonable results even when driven with audio from other speakers with different gender, accent, or language, as we demonstrate with a user study. The results are applicable to in-game dialogue, low-cost localization, virtual reality avatars, and telepresence.

Видео Audio-Driven Facial Animation by Joint End-to-End Learning of Pose and Emotion канала Tero Karras FI

Показать

Комментарии отсутствуют

Информация о видео

Другие видео канала

Production-Level Facial Performance Capture Using Deep Convolutional Neural Networks

Production-Level Facial Performance Capture Using Deep Convolutional Neural Networks Progressive Growing of GANs for Improved Quality, Stability, and Variation

Progressive Growing of GANs for Improved Quality, Stability, and Variation One hour of imaginary celebrities

One hour of imaginary celebrities A Style-Based Generator Architecture for Generative Adversarial Networks

A Style-Based Generator Architecture for Generative Adversarial Networks Progressive Growing of GANs for Improved Quality, Stability, and Variation

Progressive Growing of GANs for Improved Quality, Stability, and Variation Speech and Facial Animation at SIGGRAPH 2017 -- comparison of technical papers

Speech and Facial Animation at SIGGRAPH 2017 -- comparison of technical papers