Modern Hopfield Networks - Dr Sepp Hochreiter

Dr Sepp Hochreiter is a pioneer in the field of Artificial Intelligence (AI). He was the first to identify the key obstacle to Deep Learning, the vanishing gradient problem, and then discovered a general approach to address this challenge: Long Short-Term Memory (LSTM). He thus became the founding father of modern Deep Learning and AI.

Sepp Hochreiter is a founding director of IARAI, a professor at Johannes Kepler University Linz and a recipient of the 2020 IEEE Neural Networks Pioneer Award.

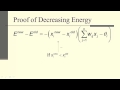

In a recent groundbreaking paper, “Hopfield Networks is All You Need”, Sepp Hochreiter’s team introduced a new modern Hopfield network with continuous states that can store exponentially many patterns and converges very fast.
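
For reference, the paper writes the network with N stored patterns as the columns of X = (x_1, …, x_N), a continuous state pattern ξ, an inverse temperature β > 0, and M = max_i ‖x_i‖. The energy function and update rule are summarized here; notation follows the paper, and this is a summary rather than a derivation. Each application of the update does not increase E (the paper shows this via the concave-convex procedure).

```latex
\begin{aligned}
\operatorname{lse}(\beta, \mathbf{z}) &= \beta^{-1} \log \sum_{i=1}^{N} \exp(\beta z_i), \\
E(\boldsymbol{\xi}) &= -\operatorname{lse}\!\left(\beta, \mathbf{X}^{\top}\boldsymbol{\xi}\right)
  + \tfrac{1}{2}\,\boldsymbol{\xi}^{\top}\boldsymbol{\xi} + \beta^{-1}\log N + \tfrac{1}{2} M^{2}, \\
\boldsymbol{\xi}^{\text{new}} &= \mathbf{X}\,\operatorname{softmax}\!\left(\beta\,\mathbf{X}^{\top}\boldsymbol{\xi}\right).
\end{aligned}
```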

Abstract: Associative memories are one of the earliest artificial neural models, dating back to the 1960s and 1970s. The best known are Hopfield Networks, presented by John Hopfield in 1982. Recently, Modern Hopfield Networks have been introduced, which tremendously increase the storage capacity and converge extremely fast. This new Hopfield network can store exponentially (with the dimension) many patterns, converges with one update, and has exponentially small retrieval errors. The number of stored patterns is traded off against convergence speed and retrieval error. The new Hopfield network has three types of energy minima (fixed points of the update): (1) a global fixed point averaging over all patterns, (2) metastable states averaging over a subset of patterns, and (3) fixed points which store a single pattern. Transformer and BERT models preferably operate in the global averaging regime in their first layers, while they operate in metastable states in higher layers. The gradient in transformers is maximal for metastable states, is uniformly distributed for global averaging, and vanishes for a fixed point near a stored pattern. Using the Hopfield network interpretation, we analyzed the learning of transformer and BERT models. Learning starts with attention heads that average, and then most of them switch to metastable states. However, the majority of heads in the first layers still average and can be replaced by averaging, e.g. by our proposed Gaussian weighting. In contrast, heads in the last layers steadily learn and seem to use metastable states to collect information created in lower layers. These heads seem to be a promising target for improving transformers. Neural networks that incorporate Hopfield networks outperform other methods on immune repertoire classification, where the Hopfield net stores several hundreds of thousands of patterns.
We provide a new PyTorch layer called “Hopfield”, which allows deep learning architectures to be equipped with modern Hopfield networks, a powerful new concept comprising pooling, memory, and attention.
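
To make the three regimes and the link to attention concrete, here is a minimal pure-Python sketch of the update ξ_new = X softmax(β Xᵀ ξ), written as row-wise softmax(β Q Kᵀ) V so that the same function also covers transformer attention (β = 1/√d_k, separate values). It is an illustration under these assumptions, not the authors' implementation and not the API of their PyTorch “Hopfield” layer; the function name `hopfield_retrieve`, the toy patterns, the query, and the β values are all hypothetical.

```python
import math


def softmax(z):
    # Numerically stable softmax: subtract the max before exponentiating.
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def hopfield_retrieve(stored, queries, beta, values=None):
    """Apply one update softmax(beta * Q K^T) V, row by row (hypothetical helper).

    stored  -- the N stored patterns x_i (keys), each a list of d floats
    queries -- state patterns xi (one per output row), each a list of d floats
    beta    -- inverse temperature
    values  -- optional N value vectors; defaults to the stored patterns, in which
               case each row is the Hopfield update xi_new = X softmax(beta X^T xi)
    Returns (outputs, weights): one output vector and one weight vector per query.
    """
    if values is None:
        values = stored
    outputs, weights = [], []
    for xi in queries:
        # Similarity of the state to every stored pattern, scaled by beta.
        p = softmax([beta * sum(a * b for a, b in zip(x, xi)) for x in stored])
        # New state: convex combination of the values, weighted by p.
        outputs.append([sum(p_i * v[j] for p_i, v in zip(p, values))
                        for j in range(len(values[0]))])
        weights.append(p)
    return outputs, weights


# Hypothetical toy memory: x0 and x1 are similar, x2 points the other way.
patterns = [
    [1.0, 1.0, 1.0, -1.0],   # x0
    [1.0, 1.0, -1.0, -1.0],  # x1
    [-1.0, -1.0, 1.0, 1.0],  # x2
]
query = [1.0, 1.0, 0.2, -1.0]  # a noisy version of x0

# The same query under three inverse temperatures.
results = {}
for beta in (0.0, 1.0, 10.0):
    out, w = hopfield_retrieve(patterns, [query], beta)
    results[beta] = (out[0], w[0])
    print(f"beta={beta:>4}: p={[round(v, 3) for v in w[0]]}")

# Transformer-style attention: beta = 1/sqrt(d_k), separate values, one row per query.
d_k = len(patterns[0])
values = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # hypothetical value vectors
attn, _ = hopfield_retrieve(patterns, [query, patterns[2]], 1.0 / math.sqrt(d_k), values)
print("attention output:", [[round(v, 3) for v in row] for row in attn])
```

With β = 0 the weights are uniform, so the update returns the mean of all stored patterns (the global averaging regime). At β = 1 almost all weight is split between x0 and x1, an average over a subset that illustrates the metastable regime. At β = 10 about 98% of the weight lands on x0, so a single update already returns a close copy of the stored pattern. Feeding one query over a whole set of inputs likewise collapses that set into a single vector, which is one way to read the “pooling” part of the layer's description.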

Subscribe to our newsletter and stay in the know:

https://www.iarai.ac.at/event-type/seminars/

_________

IARAI | Institute of Advanced Research in Artificial Intelligence

www.iarai.org

Video “Modern Hopfield Networks - Dr Sepp Hochreiter” from the IARAI Research channel

Other videos from the channel

Lecture 11/16 : Hopfield nets and Boltzmann machines

Hopfield Networks is All You Need (Paper Explained)

Spiking Neural Networks for More Efficient AI Algorithms

Paper Review - GLOM: How to Represent Part-Whole Hierarchies in a Neural Network by Geoffrey Hinton

Hopfield Networks in 2021 - Fireside chat between Sepp Hochreiter and Dmitry Krotov | NeurIPS 2020

20: Hopfield Networks - Intro to Neural Computation

How Deep Neural Networks Work

What is a Hopfield Network?

Machine Learning in Remote Sensing and Climate Research - Prof. Dr. Wouter Dorigo

Why I Don't Like Machine Learning

LSTM is dead. Long Live Transformers!

Neural diffusion PDEs, differential geometry, and graph neural networks - Michael Bronstein

S18 Lecture 20: Hopfield Networks 1

Emergence, dynamics, and behaviour - John Hopfield

Perceptron | Neural Networks

Illustrated Guide to Recurrent Neural Networks: Understanding the Intuition

MIT 6.S191 (2021): Recurrent Neural Networks

Geoffrey Hinton – Capsule Networks

Hopfield Networks