Introduction To Optimization: Gradients, Constraints, Continuous and Discrete Variables

A brief introduction to the concepts of gradients, constraints, and the differences between continuous and discrete variables. This video is part of an introductory optimization series.

NOTE: There is a typo in the slope formula at 00:30. It should be delta_y/delta_x.

TRANSCRIPT:

Hello, and welcome to Introduction To Optimization. This video continues our discussion of basic optimization vocabulary.

In the previous video we covered objective functions and decision variables. This video will cover gradients, constraints, continuous variables, and discrete variables.

Gradients/derivatives

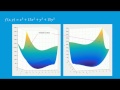

Derivatives and gradients describe the slope of a function: whether it increases or decreases in a given direction, and how steeply. For a function of several variables (dimensions), the collection of slopes along each variable is called the gradient. In optimization, we take the gradient of the objective function, and also of any constraints. Gradients tell the solver which direction to move in to approach the optimum value. Finding gradients for a function is an important part of optimization, and can be accomplished in a number of ways, including analytic differentiation, numerical differentiation, and automatic differentiation. Most optimization packages include methods for finding function gradients.
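As a rough illustration of two of those approaches, the sketch below compares an analytic gradient with a numerical one computed by central differences. The function `f`, the helper names, and the step size `h` are all assumptions chosen for the example; they do not come from the video.

```python
# Hypothetical objective function, chosen only for illustration.
def f(x, y):
    return x**2 + 3 * y**2


# Analytic differentiation: the partial derivatives worked out by hand.
def analytic_gradient(x, y):
    return (2 * x, 6 * y)


# Numerical differentiation: approximate each partial derivative with a
# central difference, i.e. delta_f / delta_x over a small step h.
def numerical_gradient(func, point, h=1e-6):
    grad = []
    for i in range(len(point)):
        forward = list(point)
        backward = list(point)
        forward[i] += h
        backward[i] -= h
        grad.append((func(*forward) - func(*backward)) / (2 * h))
    return tuple(grad)


point = (1.0, 2.0)
exact = analytic_gradient(*point)
approx = numerical_gradient(f, point)
print("analytic :", exact)
print("numerical:", approx)
```

The two results should agree to several decimal places. Automatic differentiation, the third approach mentioned, is usually provided by a library rather than written by hand.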

Constraints

Constraints describe where the optimizer cannot go, or additional conditions that must be met for a successful solution. For example, when optimizing the size of a rectangle, we could add the constraint that the lengths of the two sides multiplied together must be less than five. This is an inequality constraint. We can also add equality constraints, such as a + b = 10, or a*b/(a+b) = 3. Other examples of constraints might be a bridge that must hold at least 80,000 pounds, or a chemical mixture that must be at least 99 percent pure. Optimal solutions tend to be right up against the constraints. Picture a ball rolling downhill and encountering a wall: it comes to rest against the wall rather than at the bottom of the hill.
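The ball-and-wall picture can be sketched in a few lines of code. This is a minimal illustration, not a production solver: the objective, the wall position, the step size, and the iteration count are all assumed for the example, and the method shown (gradient descent with the iterate clipped back into the feasible region) is just one simple way to handle an inequality constraint.

```python
# Hypothetical one-dimensional problem:
#   minimize (x - 3)^2   subject to   x <= 2
# Without the constraint the minimum is at x = 3; the "wall" sits at x = 2.

def objective_gradient(x):
    return 2 * (x - 3)


WALL = 2.0        # inequality constraint: x <= WALL
STEP_SIZE = 0.1   # assumed step size for the descent

x = 0.0           # starting point, inside the feasible region
for _ in range(50):
    x = x - STEP_SIZE * objective_gradient(x)  # roll downhill
    x = min(x, WALL)                           # stop at the wall

x_star = x
print("constrained optimum:", x_star)
```

The iterate moves toward the unconstrained minimum at 3 but ends at the wall, which is the sense in which an optimal solution sits right up against a constraint.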

Continuous versus Binary / Integer / Discrete variables

One important distinction to note in optimization is that between continuous variables and discrete, binary, or integer variables. Many variables can be represented as a continuous range of values, such as size, weight, speed, or concentration. For example, a car can go 5 mph, 10 mph, or anywhere in between. However, other variables can only be represented by discrete quantities, such as the number of holes that should be drilled in a board, or a pipe diameter chosen from available models. These are often called integer variables. Other variables are best represented by binary values, 1 or 0, such as whether a switch is on or off. The types of variables involved in a problem affect the optimization methods that can be used. In general, optimization problems involving binary or integer variables are much more difficult than continuous problems, because the function is no longer smooth in those variables: there is no well-defined derivative between the allowed values for a gradient-based solver to follow.
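To make the difference concrete, here is a small sketch under assumed numbers. The cost function, the range of hole counts, the switch loads, and the power limit are all hypothetical. It solves the discrete cases by brute-force enumeration, which works for tiny problems but grows quickly: n binary variables give 2^n combinations.

```python
from itertools import product


# Hypothetical cost, smooth in x, with its minimum at x = 2.6.
def cost(x):
    return (x - 2.6) ** 2


# Continuous variable: setting the derivative 2 * (x - 2.6) to zero gives x = 2.6.
continuous_best = 2.6

# Integer variable (e.g. number of holes): no derivative to follow between
# allowed values, so this sketch simply tries every candidate.
integer_best = min(range(0, 11), key=cost)

# Binary variables (e.g. switches on or off): pick the combination with the
# largest total load that does not exceed an assumed limit.
loads = [4.0, 3.0, 2.0]   # hypothetical load per switch
limit = 6.0               # hypothetical limit on total load


def total_load(combo):
    return sum(load * state for load, state in zip(loads, combo))


feasible = [c for c in product((0, 1), repeat=len(loads)) if total_load(c) <= limit]
best_combo = max(feasible, key=total_load)

print("continuous optimum:", continuous_best)
print("integer optimum   :", integer_best)
print("best switch states:", best_combo)
```

Practical solvers for integer and binary problems use more sophisticated search strategies than plain enumeration, but the underlying difficulty is the same.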

Summary

Gradients / Derivatives

Derivatives and gradients describe the slope of a function and help a solver find the optimum.

Constraints

Constraints tell the solver where it cannot go. They often represent physical limitations of a system.

Continuous, discrete or integer, and binary variables create different types of optimization problems that must be solved using different approaches. Discrete problems are often more difficult for optimizers to solve.

Video: Introduction To Optimization: Gradients, Constraints, Continuous and Discrete Variables, from the AlphaOpt channel

