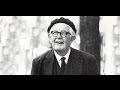

Complete Statistical Theory of Learning (Vladimir Vapnik) | MIT Deep Learning Series

Lecture by Vladimir Vapnik in January 2020, part of the MIT Deep Learning Lecture Series.

Slides: http://bit.ly/2ORVofC

Associated podcast conversation: https://www.youtube.com/watch?v=bQa7hpUpMzM

Series website: https://deeplearning.mit.edu

Playlist: http://bit.ly/deep-learning-playlist

OUTLINE:

0:00 - Introduction

0:46 - Overview: Complete Statistical Theory of Learning

3:47 - Part 1: VC Theory of Generalization

11:04 - Part 2: Target Functional for Minimization

27:13 - Part 3: Selection of Admissible Set of Functions

37:26 - Part 4: Complete Solution in Reproducing Kernel Hilbert Space (RKHS)

53:16 - Part 5: LUSI Approach in Neural Networks

59:28 - Part 6: Examples of Predicates

1:10:39 - Conclusion

1:16:10 - Q&A: Overfitting

1:17:18 - Q&A: Language

CONNECT:

- If you enjoyed this video, please subscribe to this channel.

- Twitter: https://twitter.com/lexfridman

- LinkedIn: https://www.linkedin.com/in/lexfridman

- Facebook: https://www.facebook.com/lexfridman

- Instagram: https://www.instagram.com/lexfridman

Video: Complete Statistical Theory of Learning (Vladimir Vapnik) | MIT Deep Learning Series, from the Lex Fridman channel