Type I and II Errors, Power, Effect Size, Significance and Power Analysis in Quantitative Research

Correction: there is a mistake at 9:22 in the video. Alpha is normally set to 0.05, not 0.5. Thank you, Victoria, for bringing this to my attention.

This video reviews key terminology relating to type I and II errors, along with examples. It then briefly reviews considerations of Power, Effect Size, Significance and Power Analysis in Quantitative Research. http://youstudynursing.com/

Research eBook on Amazon: http://amzn.to/1hB2eBd


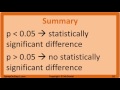

Quantitative research is driven by research questions and hypotheses. For every hypothesis there is an unstated null hypothesis. The null hypothesis does not need to be explicitly stated because it is always the opposite of the hypothesis. To demonstrate that a hypothesis is likely true, researchers need to compare it to the opposite situation. The research hypothesis will be about some kind of relationship between variables. The null hypothesis is the assertion that the variables being tested are not related and that the results are the product of random chance. Remember that "null" is kind of like "no", so a null hypothesis means there is no relationship.

For example, a researcher might ask the question "Does having class for 12 hours in one day lead to nursing student burnout?"

The hypothesis would indicate the researcher's best guess of the results: "A 12-hour day of classes causes nursing students to burn out."

Therefore the null hypothesis would be that "12 hours of class in one day has nothing to do with student burnout."

The only way of backing up a hypothesis is to refute the null hypothesis. Instead of trying to prove the hypothesis that 12 hours of class causes burnout, the researcher must show that the null hypothesis is likely to be wrong. This rule means assuming that there is no relationship until there is evidence to the contrary.

In every study there is a chance for error. There are two major types of error in quantitative research -- type 1 and type 2. Since they are defined as errors, both types focus on mistakes the researcher may make. Talking about type 1 and type 2 errors can be mentally tricky because it seems like you are talking in double and even triple negatives. This is because both types of error are defined according to the researcher's decision regarding the null hypothesis, which assumes no relationship among variables.

Instead of remembering the entire definition of each type of error, just remember which type has to do with rejecting the null hypothesis and which one is about accepting it.

A type I error occurs when the researcher mistakenly rejects the null hypothesis. If the null hypothesis is rejected it means that the researcher has found a relationship among variables. So a type I error happens when there is no relationship but the researcher finds one.

A type II error is the opposite. A type II error occurs when the researcher mistakenly accepts the null hypothesis (more precisely, fails to reject it). If the null hypothesis is accepted, it means that the researcher has not found a relationship among variables. So a type II error happens when there is a relationship but the researcher does not find it.

To remember the difference between these errors, think about a stubborn person. Your first instinct as a researcher may be to reject the null hypothesis because you want your prediction of an existing relationship to be correct. If you decide that your hypothesis is right when you are actually wrong, a type I error has occurred.

A type II error happens when you decide your prediction is wrong when you are actually right.
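The two definitions can be laid out as a small decision table. The sketch below is only an illustration: the function name `classify_decision` and its two boolean arguments are hypothetical, and in a real study the truth of the null hypothesis is never known for certain.

```python
def classify_decision(null_is_true: bool, rejected_null: bool) -> str:
    """Label a researcher's decision about the null hypothesis.

    null_is_true:  whether there is really no relationship (unknown in practice).
    rejected_null: whether the researcher concluded that a relationship exists.
    """
    if null_is_true and rejected_null:
        # No relationship exists, but the researcher "found" one.
        return "type I error"
    if not null_is_true and not rejected_null:
        # A relationship exists, but the researcher did not find it.
        return "type II error"
    return "correct decision"


# Print all four combinations of reality and decision.
for null_is_true in (True, False):
    for rejected_null in (True, False):
        reality = "no relationship" if null_is_true else "relationship exists"
        decision = "rejects null" if rejected_null else "does not reject null"
        print(f"{reality:20} | {decision:21} | "
              f"{classify_decision(null_is_true, rejected_null)}")
```

In the class schedule example, a type I error would mean concluding that 12-hour days cause burnout when they do not, and a type II error would mean missing a burnout effect that is really there.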

One way to remember the meaning of type 1 and type 2 errors is to find an example or analogy that works for you. As a nurse you may identify most with the idea of medical tests. A lot of teachers use the analogy of a courtroom when explaining type 1 and type 2 errors. I thought students might appreciate our example study regarding class schedules as an analogy.

It is impossible to know for sure when an error occurs, but researchers can control the likelihood of making an error in statistical decision making. The likelihood of making an error is related to statistical considerations that are used to determine the needed sample size for a study.
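One way to see how the likelihood of an error is controlled is to simulate many studies in which the null hypothesis is true by construction. The sketch below is an assumption-laden illustration, not a method from the video: it uses a two-sample z-test with a known standard deviation of 1, and the function names, the 30 students per group and the 2000 simulated studies are all hypothetical choices.

```python
import random
from statistics import NormalDist, mean


def z_test_rejects(group_a, group_b, sigma, alpha):
    """Two-sided two-sample z-test with known sigma; True if the null is rejected."""
    standard_error = sigma * (1 / len(group_a) + 1 / len(group_b)) ** 0.5
    z = (mean(group_a) - mean(group_b)) / standard_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_value < alpha


def type_one_error_rate(trials=2000, n=30, alpha=0.05, seed=1):
    """Share of simulated studies that reject a null hypothesis that is true."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(trials):
        # Both groups come from the same distribution, so there is no real effect.
        group_a = [rng.gauss(0.0, 1.0) for _ in range(n)]
        group_b = [rng.gauss(0.0, 1.0) for _ in range(n)]
        if z_test_rejects(group_a, group_b, sigma=1.0, alpha=alpha):
            rejections += 1
    return rejections / trials


rate = type_one_error_rate()
print(f"Estimated type I error rate: {rate:.3f}")
```

Under these assumptions the estimated rate should land close to the chosen alpha, which is why setting alpha is how a researcher limits the risk of a type I error.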

When determining a sample size, researchers need to consider the desired Power, the expected Effect Size and the acceptable Significance level.
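As a rough sketch of how these three inputs combine, the function below uses the common normal-approximation formula for comparing two group means, n per group = 2 * ((z for alpha + z for power) / effect size) squared. The formula choice, the function name `sample_size_per_group` and the default values are assumptions made for illustration; dedicated power analysis software, which typically uses the t distribution, can give slightly larger numbers.

```python
import math
from statistics import NormalDist


def sample_size_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate participants needed per group for a two-sided comparison of means.

    effect_size is a standardized mean difference (Cohen's d).
    """
    if effect_size == 0:
        raise ValueError("effect_size must be non-zero")
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # critical value for significance
    z_power = std_normal.inv_cdf(power)          # quantile for the desired power
    n = 2 * ((z_alpha + z_power) / effect_size) ** 2
    return math.ceil(n)  # round up to whole participants


for d in (0.2, 0.5, 0.8):
    print(f"effect size {d}: about {sample_size_per_group(d)} per group")
```

The loop shows the general pattern: the smaller the expected effect, the more participants are needed to reach the same power at the same significance level.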

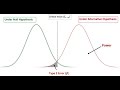

Power is the probability that the researcher will make a correct decision to reject the null hypothesis when it is in reality false, thereby avoiding a type II error. It refers to the probability that your test will find a statistically significant difference when such a difference actually exists. Another way to think about it is as the ability of a test to detect an effect if the effect really exists.

The more power a study has, the lower the risk of a type II error. If power is low, the risk of a type II error is high. ...
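The link between power and the risk of a type II error can be sketched numerically. The example assumes a two-sided comparison of two group means under a normal approximation; the function name `power_two_sample`, the effect size of 0.5 and the group sizes are hypothetical values chosen only to illustrate the relationship.

```python
from statistics import NormalDist


def power_two_sample(n_per_group, effect_size, alpha=0.05):
    """Approximate power of a two-sided two-sample z-test.

    effect_size is a standardized mean difference (Cohen's d).
    """
    std_normal = NormalDist()
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    # Expected size of the test statistic when the effect is real.
    shift = effect_size * (n_per_group / 2) ** 0.5
    # Probability that the statistic falls in either rejection region.
    return std_normal.cdf(shift - z_crit) + std_normal.cdf(-shift - z_crit)


for n in (10, 30, 63, 100):
    power = power_two_sample(n, effect_size=0.5)
    print(f"n per group = {n:3d}: power = {power:.2f}, "
          f"type II error risk = {1 - power:.2f}")
```

Because the risk of a type II error is one minus the power, the two columns always move in opposite directions as the sample size changes.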

Video: Type I and II Errors, Power, Effect Size, Significance and Power Analysis in Quantitative Research, from the NurseKillam channel
