JAX: accelerated machine learning research via composable function transformations in Python

JAX is a system for high-performance machine learning research and numerical computing. It offers the familiarity of Python+NumPy together with hardware acceleration, and it enables the definition and composition of user-wielded function transformations useful for machine learning programs. These transformations include automatic differentiation, automatic batching, end-to-end compilation (via XLA), parallelizing over multiple accelerators, and more. Composing these transformations is the key to JAX’s power and simplicity.
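The composition idea can be illustrated with a minimal sketch. It stacks three of the transformations named above: `jax.grad` for automatic differentiation, `jax.vmap` for automatic batching, and `jax.jit` for XLA compilation. Together they compute per-example gradients of a toy loss. The squared-error loss, the weights and the data are hypothetical illustrations and do not come from the talk. Parallelization across accelerators is not shown.

```python
import jax
import jax.numpy as jnp

# Hypothetical toy loss: squared error of a linear model on ONE example.
def loss(w, x, y):
    pred = jnp.dot(x, w)
    return (pred - y) ** 2

# grad: differentiate the loss with respect to its first argument, w.
grad_loss = jax.grad(loss)

# vmap: batch over the leading axis of x and y while sharing the same w.
per_example_grads = jax.vmap(grad_loss, in_axes=(None, 0, 0))

# jit: compile the composed function end to end with XLA.
fast_per_example_grads = jax.jit(per_example_grads)

# Hypothetical data: 4 examples with 3 features each.
w = jnp.array([1.0, -2.0, 0.5])
xs = jnp.ones((4, 3))
ys = jnp.zeros(4)

g = fast_per_example_grads(w, xs, ys)
print(g.shape)  # (4, 3): one gradient vector per example
```

Each transformation takes a function and returns another function, so the transformations nest freely. Here x·w = -0.5 for every example, so every entry of `g` is 2 · (-0.5) · 1 = -1.0.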

JAX had its initial open-source release in December 2018 (https://github.com/google/jax). It’s used by researchers for a wide range of advanced applications, from studying training dynamics of neural networks, to probabilistic programming, to scientific applications in physics and biology.

Presented by Matthew Johnson

Video "JAX: accelerated machine learning research via composable function transformations in Python" from the ACM SIGPLAN channel

Информация о видео

Другие видео канала

EI Seminar - Matthew Johnson - JAX: accelerated ML research via composable function transformations

EI Seminar - Matthew Johnson - JAX: accelerated ML research via composable function transformations Python Rich - The BEST way to add Colors, Emojis, Tables and More...

Python Rich - The BEST way to add Colors, Emojis, Tables and More... JAX: Accelerated Machine Learning Research | SciPy 2020 | VanderPlas

JAX: Accelerated Machine Learning Research | SciPy 2020 | VanderPlas Bioinformatics Project from Scratch - Drug Discovery Part 1 (Data Collection and Pre-Processing)

Bioinformatics Project from Scratch - Drug Discovery Part 1 (Data Collection and Pre-Processing) Jake VanderPlas - How to Think about Data Visualization - PyCon 2019

Jake VanderPlas - How to Think about Data Visualization - PyCon 2019 Automatic Differentiation Explained with Example

Automatic Differentiation Explained with Example "High performance machine learning with JAX" - Mat Kelcey (PyConline AU 2021)

"High performance machine learning with JAX" - Mat Kelcey (PyConline AU 2021) Watch this before buying CodeAcademy

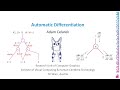

Watch this before buying CodeAcademy Automatic Differentiation

Automatic Differentiation Christoph Deil - Understanding Numba - the Python and Numpy compiler

Christoph Deil - Understanding Numba - the Python and Numpy compiler Top 18 Most Useful Python Modules

Top 18 Most Useful Python Modules A Day in the Life of a Harvard Computer Science Student

A Day in the Life of a Harvard Computer Science Student Intro to JAX: Accelerating Machine Learning research

Intro to JAX: Accelerating Machine Learning research Solving Systems Of Equations Using Sympy And Numpy (Python)

Solving Systems Of Equations Using Sympy And Numpy (Python) Joel Grus: Learning Data Science Using Functional Python

Joel Grus: Learning Data Science Using Functional Python 10 Things I Wish I Knew Before Coding Bootcamp

10 Things I Wish I Knew Before Coding Bootcamp Introduction to JAX

Introduction to JAX Machine Learning with JAX - From Zero to Hero | Tutorial #1

Machine Learning with JAX - From Zero to Hero | Tutorial #1 The 7 steps of machine learning

The 7 steps of machine learning Daniel Kirsch - Functional Programming in Python

Daniel Kirsch - Functional Programming in Python