Understanding Weight Initialization in Neural Networks: Normal, Xavier, He, and Leaky He

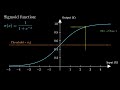

Weight initialization is crucial in training neural networks because it sets the starting point for the optimization algorithm. Activation functions apply the non-linear transformation in the network, and different activation functions serve different purposes. Choosing a weight initialization that suits the activation function is key to better neural network performance.

In this tutorial you'll learn:

* Gaussian/Normal initialization

* Xavier initialization for sigmoid/tanh

* He (Kaiming) initialization for ReLU

* Leaky He initialization

* Comparing different initialization methods

* Universal initialization implementation in Python
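As a rough illustration of how the methods listed above differ, here is a minimal NumPy sketch. It is not the code from the video or the linked notebook: the function name `init_weights`, its parameters, the fixed `0.01` scale for plain normal initialization, and the default `negative_slope` are all assumptions made for this example. The formulas are the commonly cited normal-distribution variants: Xavier uses a standard deviation of sqrt(2 / (fan_in + fan_out)), He uses sqrt(2 / fan_in), and the Leaky ReLU variant of He uses sqrt(2 / ((1 + negative_slope^2) * fan_in)).

```python
import numpy as np


def init_weights(fan_in, fan_out, method="xavier", negative_slope=0.01, rng=None):
    """Return a (fan_in, fan_out) weight matrix drawn from a zero-mean normal.

    Hypothetical helper for illustration only; names and defaults are assumptions.
    """
    rng = np.random.default_rng() if rng is None else rng

    if method == "normal":
        # Plain Gaussian init with a small fixed scale (0.01 is an assumed value).
        std = 0.01
    elif method == "xavier":
        # Xavier/Glorot: commonly paired with sigmoid/tanh.
        std = np.sqrt(2.0 / (fan_in + fan_out))
    elif method == "he":
        # He/Kaiming: commonly paired with ReLU.
        std = np.sqrt(2.0 / fan_in)
    elif method == "leaky_he":
        # He variant that accounts for the Leaky ReLU negative slope.
        std = np.sqrt(2.0 / ((1.0 + negative_slope**2) * fan_in))
    else:
        raise ValueError(f"Unknown initialization method: {method!r}")

    return rng.normal(loc=0.0, scale=std, size=(fan_in, fan_out))
```

For example, `init_weights(512, 256, method="he")` returns a 512×256 matrix whose sample standard deviation should be close to sqrt(2 / 512) = 0.0625.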

Code and resources:

https://github.com/nickovchinnikov/datasatanism/blob/master/code/8.WeightsAndActivation.ipynb

Full article:

https://datasanta.net/2025/01/14/weight-initialization-methods-in-neural-networks/

Join me on:

Telegram: https://t.me/datasantaa

Twitter: https://x.com/datasantaa

Timestamps:

00:07 Introduction

01:03 Gaussian initialization

03:38 Xavier initialization

06:27 He (Kaiming) initialization

08:03 Leaky He initialization

09:33 Plot: Comparing Initialization Methods

11:28 Universal Parameter Implementation

17:28 Sign off

#DeepLearning #MachineLearning #NeuralNetworks #Python #DataScience #AI #Programming #Tutorial

Video "Understanding Weight Initialization in Neural Networks: Normal, Xavier, He, and Leaky He" from the Data Santa channel



Video information

January 16, 2025, 21:00:30

00:18:24
